OpenML.fyi in 2024

I started this newsletter with the goal of “thinking out loud” about the role of open in the brave new world of machine learning. I don’t have a full or succinct answer for that yet, but I do have a much better feel for the space now—so I’m glad I did it.

What next? I’ve found AI news harder to follow, both because of its volume and because I have weaned myself off Twitter. I’m also personally ready to move from passive learning toward more active involvement.

I expect the newsletter’s contents to reflect that shift: less news, and more of a turn toward the personal and the polemical. What should I do? What should our organizations and movements do?

I realize that won’t be for everyone—no hard feelings if you unsubscribe! But I hope I’ll continue to be interesting, at least.

Upcoming IRL

I’ll be debating open source AI with Paul Ohm, Blake Reid, and Casey Fiesler at Silicon Flatirons on Feb. 4th. If you’re there, please say hi!

The limitations of open licensing: RAIL and porn

Late last year brought another report of a porn-focused startup built on StabilityAI’s RAIL-licensed Stable Diffusion, this time from Bellingcat (previously, from 404 Media).

What does this tell us about responsible licensing and its limitations? A few things:

- A responsible license is only as responsible as its licensor. If Stability doesn’t want to enforce the license, its responsibility-focused terms do very little on their own. The same is true of terms of use, of course. Query whether a third-party beneficiary clause like the one in the Cryptographic Autonomy License, plus the theory being tested in the ongoing Vizio litigation, could allow injured third parties to enforce such a license themselves.

- Enforcement is hard even when you want to do it. Both CivitAI and AnyDream seem to be deliberately obscuring where they’re based; their privacy and copyright policies give no contact or jurisdictional information, for example. So even if Stability wanted to enforce its license, it would struggle to know where to bring a claim.

- We lack experience policing criminal acts through an open license. One plank of Stable Diffusion’s variant of the RAIL license prohibits illegal acts. Similar clauses aren’t unusual in commercial contracts, but we have no experience enforcing them in open licensing, and enforcement would be especially fraught here because the jurisdictional question of “illegal where?” is very complex in these cases. So we don’t know how this clause interacts with the allegations of non-consensual pornography, which unfortunately is not illegal everywhere. (You don’t want this clause enforced carelessly; remember that there are many jurisdictions where things your Western liberal mind approves of may not be legal.)

- Other clauses are vague. While the clause against child pornography is pretty clear, the license’s clause on “harassment” is probably not very useful here, since there is no evidence (yet) that the non-consensual imagery these tools produce is being used to harass people. This is the inevitable result of the (well-intentioned) desire to cram all of human rights law into a one-page summary.

- A lot falls on intermediaries. StabilityAI now distributes Stable Diffusion through two layers of intermediaries: a German university research lab, whose release is hosted on Hugging Face. It’s unclear how the license interacts with those intermediaries’ obligations. (Related: a good new paper on platform governance for AI intermediaries.)

I continue to give the RAIL folks a lot of slack; they’re trying to do something noble with a very weak legal toolkit. But they should be thinking about how to warn RAIL users about the licenses’ limitations, so that those users start putting other layers of enforcement in place. That includes, where appropriate, using executed contracts rather than licenses, and budgeting for license enforcement.

Eleuther.ai Pile 2.0 + data pruning

Eleuther.ai were the first project to convince me that actually-open LLMs were viable, and they are still the leaders in building LLMs in a genuinely bottom-up, open way. Their 2020 “Pile” was a critical early data set for many projects. Drawing on what they’ve learned in the past few years, they’re starting a new version of the Pile, focused, among other things, on increasing the share of its content that is clearly licensed: public domain material, government works, and works under CC or OSI-approved licenses.

Given Eleuther’s focus on replicable research, I suspect one way to think about this new Pile is as a tool for measuring how good models can be when they are built without leaning so heavily on fair use. In other words: is the industry’s commonly held belief that you can’t build a good model without fair use actually accurate? To date, we haven’t really been able to answer that question, because the right data set didn’t exist.

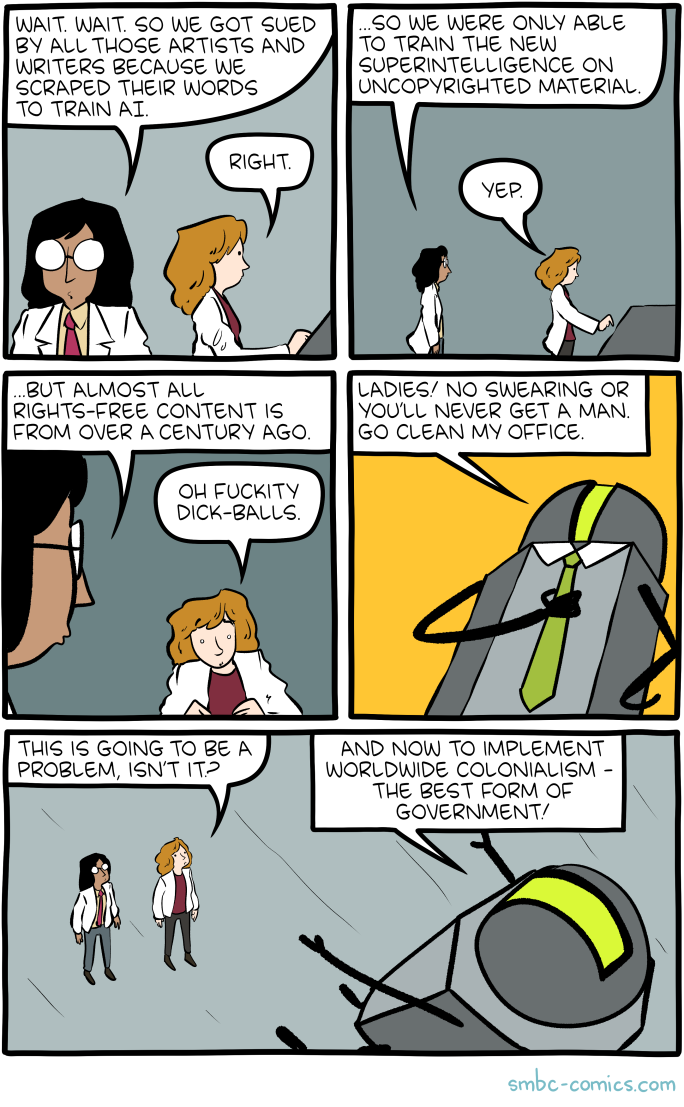

Eleuther: hoping to single-handedly disprove this SMBC comic

Building a data set that takes licensing seriously is one important part of (dis)proving that thesis. Another part will be better data techniques, so I was interested to see a paper from late last year on “pruning” data sets, which found that “the majority of pretraining data can be removed while retaining performance.” The technique still depends on high-quality data, so it doesn’t mean smaller is automatically better. But it is another sign that the tide may have turned away from “we must amass the largest possible data sets” toward “smaller, higher-quality data sets can work,” which suggests open has a fighting chance.

“Fairly Trained” and the publishing industry

A new non-profit that certifies “fairly trained” models has been announced. A couple of things to note:

- The press release trumpets that it is a “non-profit” but doesn’t mention that every supporter is an IP publisher—mostly from the music industry. This is an industry organization, not a public-interest non-profit.

- One of their advisors is Maria Pallante (former U.S. Register of Copyrights, now head of the Association of American Publishers), who famously doesn’t know what copyright is for.

- In a small hat-tip to Creative Commons’s successful license initialisms (BY, NC, SA, etc.), they say their “first” certification is called “Licensed (L)”.

The industry has never liked the “fair” framing of fair use, but I suspect that the emotional valence of the moment (including the techlash) gives them a real opening to flip the meaning of “fair” in copyright: from fair meaning “use despite not being licensed”, to fair meaning “use when licensed and only when licensed”.

Misc.

- Davos has whitepapers out on responsible AI, answering the question “how self-absorbed do you have to be to issue the 1,000th undifferentiated whitepaper on responsible AI?”

- Not open AI per se, but a really interesting story about DoNotPay, at the intersection of hype, AI, and the law.

- Apple continues to push inference onto more traditional hardware, in this case devices with less RAM. This is one sign that open can punch above its weight: when “doing things on cheap hardware” aligns with the interests of giants, open benefits.
